
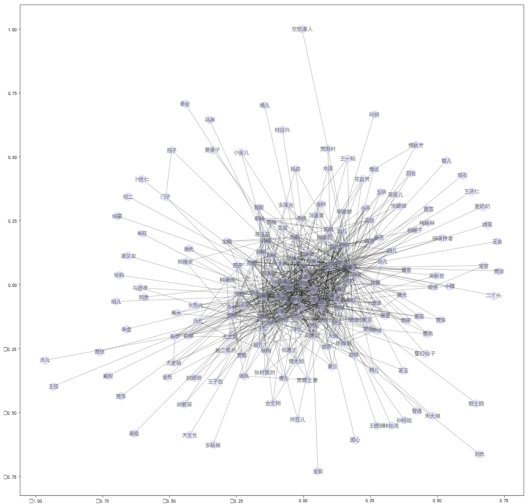

Summary: China's blockbuster war movies are becoming increasingly popular around the world. A national cinema often takes on the responsibility of representing national identity to its own citizens, and even to the world. How do China's blockbuster war movies capture Chinese nationalism, and what does the public think of them? We collected 1,000 reviews of each of two movies ("Operation Red Sea" and "Wolf Warrior") from Douban, a popular Chinese website for rating and reviewing art and entertainment, to understand what audiences think and where Chinese blockbuster war movies may be heading. Using Python, we built a word cloud of the most frequent words in each movie's reviews. We found that China's new breed of patriotic hero on the big screen reflects the rise of a superpower, and that China's war movies are shaping the image of China.
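A word cloud is drawn from a word-frequency count. The sketch below is not the authors' code; it assumes each review has already been segmented into space-separated words (Chinese text has no spaces, so a segmenter such as jieba would be needed first), and `top_words` and `STOPWORDS` are hypothetical names with an illustrative, deliberately short stopword list.

```python
from collections import Counter
from typing import Iterable

# Hypothetical stopword list for illustration only; a real analysis would use a much longer one.
STOPWORDS = frozenset({"的", "了", "是", "我", "电影"})


def top_words(
    reviews: Iterable[str],
    n: int = 10,
    stopwords: frozenset[str] = STOPWORDS,
) -> list[tuple[str, int]]:
    """Return the n most frequent words across reviews.

    Assumes each review is already segmented into space-separated words.
    Stopwords and single-character tokens are skipped.
    """
    counts: Counter[str] = Counter()
    for review in reviews:
        for token in review.split():
            if len(token) > 1 and token not in stopwords:
                counts[token] += 1
    # Words with equal counts keep the order in which they were first seen.
    return counts.most_common(n)
```

For the pre-segmented reviews `["战狼 爱国 场面 震撼", "红海 行动 场面 真实 爱国", "场面 震撼 的 电影"]`, `top_words(reviews, 3)` returns `[('场面', 3), ('爱国', 2), ('震撼', 2)]`. The full counts could then be handed to a renderer, for example as a dict to `WordCloud.generate_from_frequencies` in the `wordcloud` package (rendering Chinese characters also requires a font that supports them).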

Last month, movie ticket sales in mainland China hit 10.1 billion yuan ($1.6 billion), a world box-office record for monthly sales in a single market. No film stood out more than "Operation Red Sea," which had grossed almost 2.5 billion yuan in just 13 days by the end of February.

“Operation Red Sea” is based on the Chinese navy’s March 2015 evacuation of Yemen. Set amid militant unrest in a fictional Middle Eastern country, it tells the stories of a ship’s crew and an assault team as they rescue Chinese citizens and foreign refugees, resolving a potential nuclear crisis along the way.

Similarly, last summer's blockbuster "Wolf Warrior 2" ($854 million) tells the story of a loose-cannon Chinese soldier in an unnamed African country. "Operation Red Sea," however, tones down the patriotism of that smash hit, playing up gore rather than glorifying war.

How do China's blockbuster war movies capture Chinese nationalism? What do audiences in China and around the world think of them? Could these films be a step toward making Chinese action films more palatable overseas? We address these questions with an analysis of the review data.

Summary: In this article, we look at Hong Kong's Mandatory Provident Fund (MPF) scheme and find that some exchange-traded funds (ETFs) make up large holdings in a particular MPF fund. Calculating tracking errors over the past ten years, we find that 2008 stands out with the highest tracking error, coinciding with the global financial crisis. In other years, when stock markets were more stable, the ETFs performed relatively well. We also compare the returns of two ETFs with those of the indexes they respectively track; the results turn out to be different.
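The summary does not say how tracking error was defined. A common definition is the standard deviation of the difference between a fund's returns and its benchmark's returns. The sketch below is one possible version under stated assumptions, not the article's calculation: it assumes daily prices already aligned by date, uses the sample standard deviation, and annualizes with an assumed 252 trading days per year. `simple_returns` and `tracking_error_by_year` are hypothetical names.

```python
import math
import statistics
from collections import defaultdict
from datetime import date


def simple_returns(prices: list[float]) -> list[float]:
    """Period-over-period simple returns from a price series."""
    return [curr / prev - 1.0 for prev, curr in zip(prices, prices[1:])]


def tracking_error_by_year(
    dates: list[date],
    etf_prices: list[float],
    index_levels: list[float],
    periods_per_year: int = 252,  # assumption: daily data, about 252 trading days a year
) -> dict[int, float]:
    """Annualized tracking error for each calendar year.

    Tracking error here is the sample standard deviation of
    (ETF return - index return), scaled by sqrt(periods_per_year).
    Each return is assigned to the year of the date on which it ends.
    """
    if not (len(dates) == len(etf_prices) == len(index_levels)):
        raise ValueError("dates, etf_prices and index_levels must have equal length")
    etf_ret = simple_returns(etf_prices)
    idx_ret = simple_returns(index_levels)
    diffs: dict[int, list[float]] = defaultdict(list)
    for d, r_etf, r_idx in zip(dates[1:], etf_ret, idx_ret):
        diffs[d.year].append(r_etf - r_idx)
    return {
        year: statistics.stdev(values) * math.sqrt(periods_per_year)
        for year, values in sorted(diffs.items())
        if len(values) >= 2  # a sample standard deviation needs at least two observations
    }
```

Run over a ten-year price history, this yields one figure per year, which is the kind of year-by-year comparison in which a crisis year such as 2008 could stand out. An ETF that matches its index exactly every day would score 0.0.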
